How to Set Up Oracle Database Tables Replication in Minutes

Bis dat qui cito dat

In recent years, the topic of migration from Oracle Database to other systems has been very popular: Oracle Database Enterprise Edition is expensive, the danger of vendor lock in, and much more makes you think about it. But Oracle Database migration to other relational databases, is a large and complex project, reducing the total cost of ownership, but usually database migration to other platform is not limited to just migrating data, but also requires changing or replacing end-user applications, which increases the risk, complexity and budget of the migration project. Another thing to consider before migrating an Oracle database to another relational database is that the Oracle database handles transactional workloads very well and guarantees unparalleled data security. But real systems are not only transactional loads, but also analytical ones, and CDC in near-real time mode can help here , and we can leave Oracle Database as a purpose-built database for transactional load and take out analytical and reporting operations into other relational database management systems, or implement event-driven architecture, and this will really help to reduce the TCO and reduce the risks of new technologies. Below we will look at an example of how to quickly set up an Oracle database for replication in PostgreSQL without using Oracle Golden Gate (for Oracle Golden Gate list price please see pp4 in Oracle Technology Global Price List). The main thing in this example is ease of use and readiness for rapid implementation in modern data processing pipelines and event-driven architecture.

High Level overview

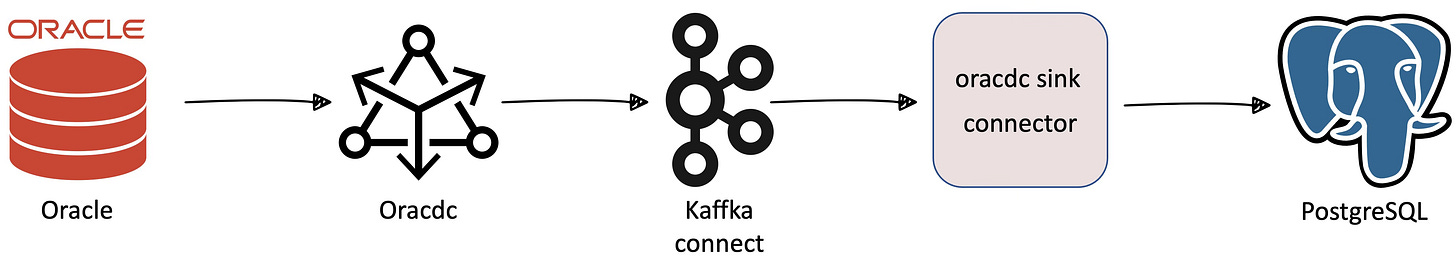

We will use the Kafka Connect ecosystem and our oracdc bundle of connectors to deliver data from Oracle to Postgres. You can see the data flow in the diagram below

Pre-requisites

Docker containers

The example uses Docker containers to simplify the deployment process, ensuring fast and consistent deployment of applications regardless of the environment: cloud or on-premise. We only need two images hosted on dockerhub: one for the Apache Kafka runtime and one for building data pipeline between the Oracle Database and PostgreSQL using Kafka Connect.

docker pull bitnami/kafka:latest

docker pull averemee/oracdc:latestOracle Database Settings

Oracle Database ARCHIVELOG mode

Using CDC assumes that your database is running in ARCHIVELOG mode. To verify, log in to the Oracle Database as SYSDBA and check the result of query below

select LOG_MODE from V$DATABASE;If the query returns ARCHIVELOG, it is enabled, otherwise (the query returned NOARCHIVELOG) - enable ARCHIVELOG mode using below code snippet

shutdown immediate

startup mount

alter database archivelog;

alter database open;Oracle Database Supplemental Logging

Oracle Database supplemental logging simply means that all columns or selected columns of table are specified for extra logging. CDC Replication requires supplemental logging. To verify supplemental logging settings at database level log in to the Oracle Database as SYSDBA and execute

select SUPPLEMENTAL_LOG_DATA_MIN, SUPPLEMENTAL_LOG_DATA_ALL

from V$DATABASE;If this query returned YES for SUPPLEMENTAL_LOG_DATA_ALL then you do not need to make any additional settings. If this query returns NO and you are in CDB environment query the ALL_COLUMN column in the DBA_SUPPLEMENTAL_LOGGING view for each PDB in the CDB to see whether all column supplemental logging is enabled in the PDB. If this request returned NO for SUPPLEMENTAL_LOG_DATA_ALL column, but this query returned YES or IMPLICIT for SUPPLEMENTAL_LOG_DATA_MIN column you can configure table-level supplemental logging for the tables participating in CDC/replication using the command below

alter table [<OWNER>.]<TABLE_NAME> add supplemental log data (ALL) columns; If this query returned NO for both columns, you need to set it for the entire database using

alter database add supplemental log data (ALL) columns;or to minimise the impact of supplemental logging, you need to set the minimal logging level for the database using

alter database add supplemental log data;and then set it for the tables participating in CDC/replication using

alter table [<OWNER>.]<TABLE_NAME> add supplemental log data (ALL) columns; Unprivileged Oracle Database account for running LogMiner

Using Oracle’s Database SYSDBA rights is not an example to follow and you should always follow the principle of leat privilege.

Example commands for creating such a account in CDB environment

create user C##ORACDC identified by oracdc

default tablespace SYSAUX

temporary tablespace TEMP

quota unlimited on SYSAUX

CONTAINER=ALL;

alter user C##ORACDC SET CONTAINER_DATA=ALL CONTAINER=CURRENT;

grant

CREATE SESSION,

SET CONTAINER,

SELECT ANY TRANSACTION,

SELECT ANY DICTIONARY,

EXECUTE_CATALOG_ROLE,

LOGMINING

to C##ORACDC

CONTAINER=ALL;In CDB environment with connection to PDB (Oracle Database 19.10+ feature)

create user ORACDC identified by oracdc

default tablespace SYSAUX

temporary tablespace TEMP

quota unlimited on SYSAUX;

grant

CREATE SESSION,

SELECT ANY TRANSACTION,

SELECT ANY DICTIONARY,

EXECUTE_CATALOG_ROLE,

LOGMINING

to C##ORACDC;For the Oracle Database 10g/11g

create user ORACDC identified by oracdc

default tablespace SYSAUX

temporary tablespace TEMP

quota unlimited on SYSAUX;

grant

CREATE SESSION,

SELECT ANY TRANSACTION,

SELECT ANY DICTIONARY,

EXECUTE_CATALOG_ROLE

to C##ORACDC;

grant execute on DBMS_LOGMNR to ORACDC;Setup check utility

Since this - Oracle Database pre-requisites check - is the main part of our story, we need to check what we have done in the previous steps. To do this, we need to run the verification utility by passing it the JDBC URL pointing to the Oracle Database and the name and password of the account we created

docker run --rm -it averemee/oracdc oraCheck.sh \

--jdbc-url <ORACLE-JDBC-URL> --user <ACCOUNT-TO-RUN-CONNECTOR> --password <ACCOUNT-PASSWORD>

If successful, the utility will output to the terminal

=====================

The oracdc's setup check was completed successfully, everything is ready to start oracdc connector

=====================

otherwise it will tell you what needs to be corrected in the settings of the Oracle Database.

PostgreSQL Database

We will need a PostgresSQL database into which we will insert data - there are no specific requirements for it.

Configuration steps

Oracle Database

We need data from the database, and if we don't have test data at hand, we can always use data from the SCOTT account, which has been around since the creation of the Oracle database. If you are wondering who SCOTT is and why the password is TIGER, read here. To create SCOTT account, simply run on the $ORACLE_HOME/rdbms/admin/utlsampl.sql using SQL*Plus and then enable supplemental logging for SCOTT’s tables (not required if V$DATABASE.SUPPLEMENTAL_LOG_DATA_ALL is set to YES)

alter table DEPT add supplemental log data (ALL) columns;

alter table EMP add supplemental log data (ALL) columns;

alter table BONUS add supplemental log data (ALL) columns;

alter table SALGRADE add supplemental log data (ALL) columns;Docker

For optimal operation of all containers, we need to create two directories in the host OS that will be used by the container

mkdir -p /var/kafka/broker

mkdir -p /var/kafka/nonheapThese directories are needed to store

Apache Kafka logs

memory mapped files for storing Oracle Database transactions

respectively.

Following is the docker compose file to run all required docker containers

version: '3'

services:

kafka-broker:

image: bitnami/kafka:latest

container_name: kafka-broker

volumes:

- /var/kafka/broker:/bitnami/kafka

ports:

- 9092

environment:

KAFKA_CFG_NODE_ID: "0"

KAFKA_CFG_PROCESS_ROLES: "controller,broker"

KAFKA_CFG_CONTROLLER_QUORUM_VOTERS: "0@kafka-broker:9093"

KAFKA_KRAFT_CLUSTER_ID: "ryGWk9_ISQ2OxoXJp4NK5Q"

KAFKA_CFG_LISTENERS: "PLAINTEXT://:9092,CONTROLLER://:9093"

KAFKA_CFG_ADVERTISED_LISTENERS: "PLAINTEXT://:9092"

KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP: "CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT"

KAFKA_CFG_CONTROLLER_LISTENER_NAMES: "CONTROLLER"

KAFKA_CFG_INTER_BROKER_LISTENER_NAME: "PLAINTEXT"

KAFKA_CFG_OFFSETS_TOPIC_REPLICATION_FACTOR: "1"

KAFKA_CFG_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: "1"

KAFKA_CFG_DEFAULT_REPLICATION_FACTOR: "1"

kafka-connect:

image: averemee/oracdc:latest

container_name: kafka-connect

volumes:

- /var/kafka/nonheap:/var/lib/oracdc

depends_on:

- kafka-broker

ports:

- 8083:8083

- 9083:9083

environment:

A2_ORACDC_BOOTSTRAP_SERVERS: "kafka-broker:9092"

A2_ORACDC_GROUP_ID: "connect-cluster"

A2_ORACDC_OFFSET_STORAGE_TOPIC: "connect-offsets"

A2_ORACDC_OFFSET_STORAGE_REPLICATION_FACTOR: "1"

A2_ORACDC_CONFIG_STORAGE_TOPIC: "connect-configs"

A2_ORACDC_CONFIG_STORAGE_REPLICATION_FACTOR: "1"

A2_ORACDC_STATUS_STORAGE_TOPIC: "connect-status"

A2_ORACDC_STATUS_STORAGE_REPLICATION_FACTOR: "1"

Deploy it using docker compose command

docker compose up -dTwo ports are open

8083 - for managing the Kafka Connect via REST API

9083 - to export JMX statistics about the performance and work of the Kafka Connect ecosystem and connectors for further use by Prometheus or other management tools

Register Connectors

We need to create two connectors - Source connector to read data from Oracle Database and send it to Apache Kafka and the second to read data from Apache Kafka topics and send it to PostgreSQL.

Source Connector

Let’s create the json file for source connector, name it as ora-source-test.json

{

"name" : "ora-source-test",

"config" : {

"connector.class" : "solutions.a2.cdc.oracle.OraCdcLogMinerConnector",

"tasks.max" : "1",

"errors.log.enable" : "true",

"errors.log.include.messages" : "true",

"a2.jdbc.url" : "jdbc:oracle:thin:@tcp://<ORACLE_HOST>:<PORT>/<SERVICE_NAME>",

"a2.jdbc.username" : "C##ORACDC",

"a2.jdbc.password" : "oracdc",

"a2.include" : "SCOTT.%",

"a2.tmpdir": "/var/lib/oracdc/chronicle",

"a2.oracdc.schemas" : "true",

"a2.process.online.redo.logs" : "true",

"a2.scn.query.interval.ms" : "5000"

}

}Deploy this connector to Kafka Connect using curl command below

curl -i -X POST -H "Accept:application/json" -H "Content-Type:application/json" http://localhost:8083/connectors/ -d @ora-source-test.jsonSink Connector

Let’s create the json file for source connector, name it as pg-sink-test.json

{

"name" : "pg-sink-test",

"config" : {

"connector.class" : "solutions.a2.kafka.sink.JdbcSinkConnector",

"tasks.max" : "3",

"topics" : "DEPT,EMP,SALGRADE",

"errors.log.enable" : "true",

"errors.log.include.messages" : "true",

"a2.jdbc.url" : "jdbc:postgresql://<PG_HOST>:<PG_PORT>/<PG_DATABASE>",

"a2.jdbc.username" : "<PG_USER>",

"a2.jdbc.password" : "<PG_PASSWORD>",

"a2.autocreate" : "true"

}

}

Deploy this connector to Kafka Connect using curl command below

curl -i -X POST -H "Accept:application/json" -H "Content-Type:application/json" http://localhost:8083/connectors/ -d @pg-sink-test.jsonTest it

Voilà - now is the time to check: insert, update or delete data in Oracle and check how these operations are displayed in PostgreSQL

Summary

I hope that the longest thing was reading this article and downloading containers from dockerub and you were convinced that making a replication prototype is easy and simple.

Feel free to contact me if you have any questions or if you would like to connect with me on LinkedIn - https://www.linkedin.com/in/averemee/